August 11, 2015

What do our Facebook posts really say about us? Some dismiss them as just noise, but several research teams are seriously considering social media as a source of psychological data. A common goal of this work is to discover faster or cheaper ways to measure important but elusive variables, like personality, health, and happiness. While I worked on the World Well-Being Project team, one of our goals was turning the language from social media into useful new measures.

For example, in a study published last year in PLoS One, the linguistic traces of age, gender, and personality were identified in a massive amount of social media language: 20 million status updates from 75,000 Facebook users. Users’ personality traits could be accurately predicted using only the words in their Facebook status updates. This is consistent with several recent studies (1-6) suggesting that statistical algorithms are surprisingly good at profiling our personalities, especially when they are fed psychologically rich information like the structure of our Facebook social network or our Facebook likes.

Does this mean that algorithms will replace personality questionnaires?

Not quite. In almost every study of this kind, researchers judge the accuracy of their algorithm by comparing it to how people answer personality questionnaires, not to their actual behaviors or other psychological measures. This is a good start, because these algorithms should agree with the way people respond to questionnaires, at a minimum. However, relying solely on this kind of validation leaves some important questions unanswered:

Question 1: Do the algorithms just predict how we respond to questionnaires, or do they agree with other ways of measuring personality, too?

You have probably completed at least one personality test that involved answering lots of multiple choice questions about yourself. These kinds of measures are what psychologists call self-report measures. Usually, these measures require respondents to indicate how accurately a phrase describes them (e.g., “I talk to a lot of different people at parties” or “I get irritated easily”).

While self-report measures are very popular, they are just one of many ways to assess personality. Friends, for example, are surprisingly good judges of our personalities—sometimes better than ourselves (7)—because our friends aren’t affected by the same kind of biases that we have when assessing ourselves. Friend ratings also give us a second way to evaluate an algorithm’s predictions.

Question 2: Do the algorithms predict real-world behaviors?

Psychologists care about personality traits because they are excellent predictors of other important everyday outcomes and behaviors (8), like relationship styles, health behaviors, career preferences, etc. So, an algorithmic assessment should also help predict those things, too. For example, if an algorithm predicts that a user is high in extraversion, we should expect that this person will also exhibit other features of extraversion, like having a larger circle of friends.

Question 3: Do the algorithms make consistent predictions over time?

Our personality traits tend to be very stable from year to year (9), but our language seems to evolve constantly, especially in social media. Think about your own Facebook statuses: are you talking about the same things now as you were six months ago? How about a year ago? A concern with language-based algorithms is that they might be overly sensitive to small, unimportant changes in our word usage. If an algorithmic assessment is valid, it should give consistent results for the same person over time.

In a recent article in the Journal of Personality and Social Psychology, we dove into these nitty-gritty details to see if language-based algorithms can really work as well as traditional assessments. We first developed a prediction algorithm using the status messages from 66,000 Facebook users. We then applied the algorithm to messages from 5,000 separate users and generated predictions of the Big Five personality traits for every user. Lastly, we analyzed several measurement properties of the algorithms to answer the questions above. Here’s what we found:

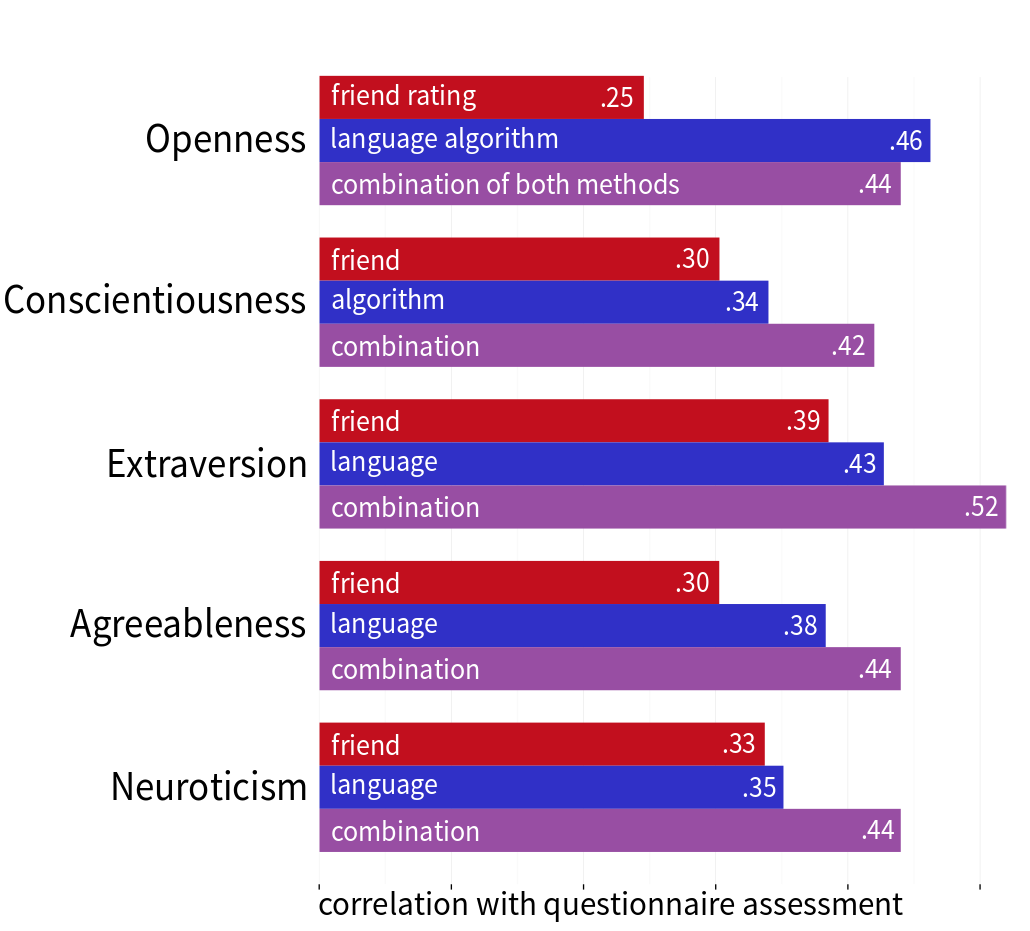

For each trait, we quantified accuracy as the correlation between the algorithms’ predictions and questionnaire measures of each trait. A correlation of 0 means the predictions are no better than random guessing; a correlation of 1 means perfect prediction. The accuracy of the algorithms was quite good: correlations ranged from .35 to .46. For comparison, we measured the accuracy of ratings made by the users’ actual friends, people who presumably know them well beyond their Facebook messages. Friends’ accuracy ranged from .25 to .39.

In other words, algorithms are roughly as accurate as a typical friend; when rating traits of Openness and Agreeableness, the algorithms were actually more accurate than a friend.
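To make the accuracy measure concrete, here is a minimal sketch of a Pearson correlation between algorithm predictions and questionnaire scores. The function name `pearson_r` and the scores are made up for illustration; they are not the study's code or data.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical extraversion scores for six users (illustrative only).
questionnaire = [3.2, 4.1, 2.5, 3.8, 4.6, 2.9]
algorithm = [3.0, 3.6, 3.1, 3.5, 4.0, 3.4]

accuracy = pearson_r(algorithm, questionnaire)
print(f"accuracy (r) = {accuracy:.2f}")
```

The same function can score friend ratings against the questionnaire, which is what allows the two kinds of judges to be compared on one scale.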

Comparing the accuracy of different personality assessments: language-based algorithms, ratings from friends, and a combination of both methods

For most traits, we found that combining the algorithm ratings with friend ratings yielded even more accurate predictions. Practically, this means that similar algorithms might be a useful way for researchers to boost the accuracy of personality assessments. But, more interestingly, it suggests that algorithms and friends see somewhat different sides of us, and each provides unique information.
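One simple way to combine two sets of ratings is to put both on a common scale and average them. The sketch below does that with standardized (z) scores. This is an assumption chosen for illustration; this post does not describe the combination method used in the article, and the names `zscores` and `combine_ratings` are hypothetical.

```python
import statistics

def zscores(values):
    """Standardize a list of scores to mean 0 and standard deviation 1."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        raise ValueError("cannot standardize a constant list")
    return [(v - mean) / sd for v in values]

def combine_ratings(algorithm_scores, friend_scores):
    """Average the standardized algorithm and friend ratings, user by user."""
    if len(algorithm_scores) != len(friend_scores):
        raise ValueError("both lists must cover the same users, in the same order")
    pairs = zip(zscores(algorithm_scores), zscores(friend_scores))
    return [(a + f) / 2 for a, f in pairs]

# Hypothetical ratings for five users on different scales (illustrative only).
algorithm_scores = [3.0, 3.6, 3.1, 3.5, 4.0]
friend_scores = [12, 18, 15, 11, 20]

combined = combine_ratings(algorithm_scores, friend_scores)
```

Standardizing first keeps either source from dominating just because it uses a wider numeric scale. The combined scores can then be correlated with questionnaire scores in the same way as either source alone.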

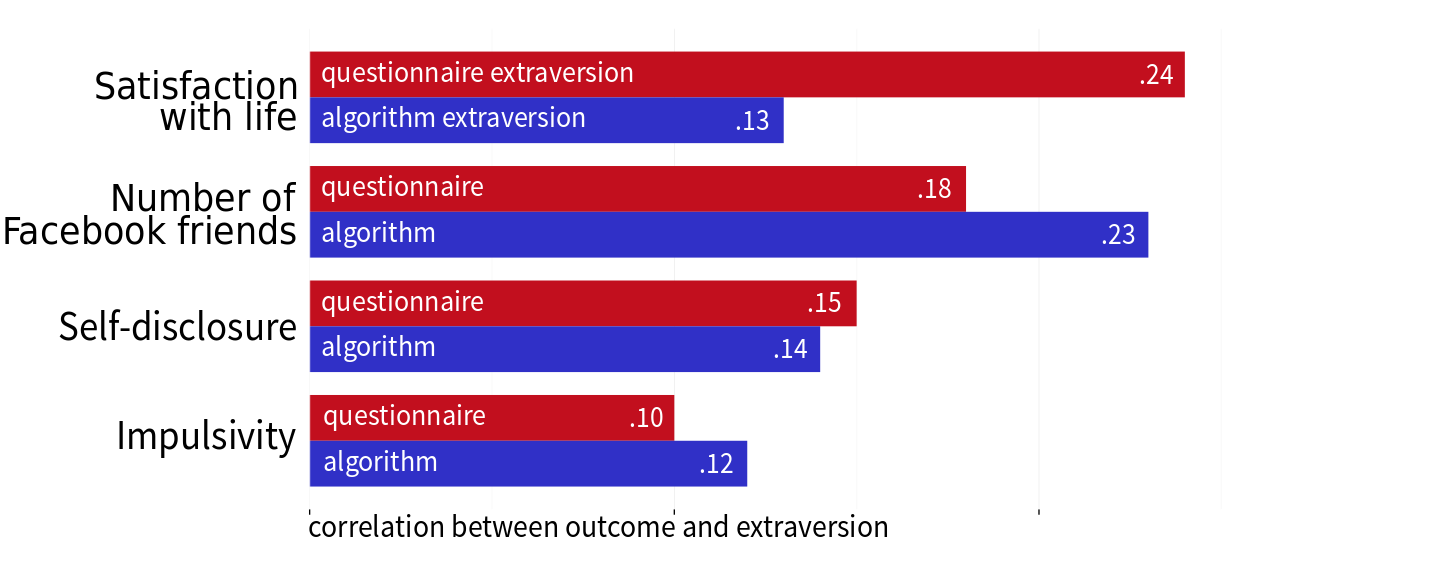

We also compared the predictions to several outcomes with well-known connections to personality. Compared to introverts, for example, extraverts typically report greater satisfaction with life (10), have larger social circles (11), and are more likely to self-disclose or share personal information (12). If an algorithm can truly assess extraversion, it should also predict these extraverted behaviors, at least as well as traditional questionnaires.

Predicting characteristics of extraversion using two kinds of extraversion assessments: a language-based algorithm and a traditional self-report questionnaire

For some outcomes, like number of Facebook friends, algorithmic predictions of personality were slightly better than questionnaires; in other cases, as with satisfaction with life, the questionnaires were slightly better. Overall, both methods had very similar patterns of relationships with relevant outcomes, suggesting that the algorithms are capturing the right information. We only show a tiny subset of the results here; see Figure 5 and Appendix C in the article for full details.

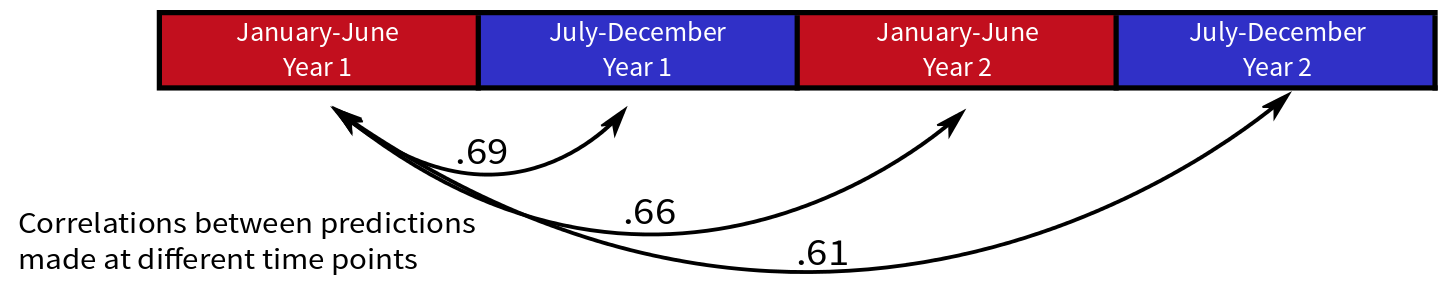

Finally, we examined the stability of the algorithms over time. Because language in social media can evolve so rapidly, we wondered if a prediction for a given person would be the same at later times. To test this, we let the algorithms generate multiple personality predictions for the same people, but we used language from different time points over a two-year period.

For example, we used a person’s messages from January to June to make one set of predictions, then we made a second set of predictions for the same people using messages from July to December, and so on. We then compared the similarity of predictions across different times.
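The comparison across time windows can be sketched as a test-retest correlation: correlate each window's predictions with the next window's, using only the people who appear in both. The data layout (one dictionary of user ID to predicted score per window) and the function names are assumptions for illustration, not the article's pipeline.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def retest_correlations(predictions_by_window):
    """Correlate predictions between each pair of consecutive time windows.

    predictions_by_window: list of dicts (user_id -> predicted trait score),
    in chronological order. Only users present in both windows are compared.
    """
    results = []
    for earlier, later in zip(predictions_by_window, predictions_by_window[1:]):
        shared = sorted(set(earlier) & set(later))
        results.append(pearson_r([earlier[u] for u in shared],
                                 [later[u] for u in shared]))
    return results

# Hypothetical extraversion predictions from two six-month windows.
windows = [
    {"ann": 3.1, "bo": 4.0, "cy": 2.4, "di": 3.7},   # January to June
    {"ann": 3.3, "bo": 3.8, "cy": 2.6, "di": 3.9},   # July to December
]
stability = retest_correlations(windows)
```

Values near 1 mean that people keep roughly the same rank order from one window to the next, which is what stability means here.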

Language-based algorithms make stable predictions over time

The algorithms were surprisingly consistent over time, roughly on par with traditional questionnaires (average six-month test-retest correlations were r = .70; see Table 5 and Appendix E in the article for full details). How can a language-based algorithm make consistent predictions if our language is always changing? While buzzwords and memes may come and go, our use of the most revealing language—pronouns, cursing, and emotion words—is very stable.

We found that our language-based algorithms perform much like traditional personality assessments: they agree with questionnaires and friend ratings, they predict the right kind of outcomes, and they are consistent over time. As researchers mine social media and propose new metrics, establishing these basic psychometric properties will be increasingly important. More validation work is needed to understand how to extend these techniques to other areas, like well-being and mental health. Still, this is a promising sign that status messages and tweets aren’t just noise. With the right techniques, they can yield valid personality assessments and, ultimately, insight into who we are.